Class page maintained by D. Odden (readings and additional information)

Generative phonology has long relied on elaborate computational and representational machinery attributed to Universal Grammar (UG), including many putative universals of physical substance. The goal of phonological theory has previously been to articulate a very rich innate component which minimizes how much is learned in a language. There is a contrary generative trend, which may be identified as the Formal Phonology (FP) program, to the effect that the content of UG must be minimized, adhering to the principle that everything is learned unless it cannot possibly be learned. This view of phonology is consistent with and ultimately impelled by analogous logical concerns in syntax within the Minimalist Program. This course investigates the principles of a minimalist theory of phonology, and compares that theory to SPE theory, autosegmental phonology and OT.

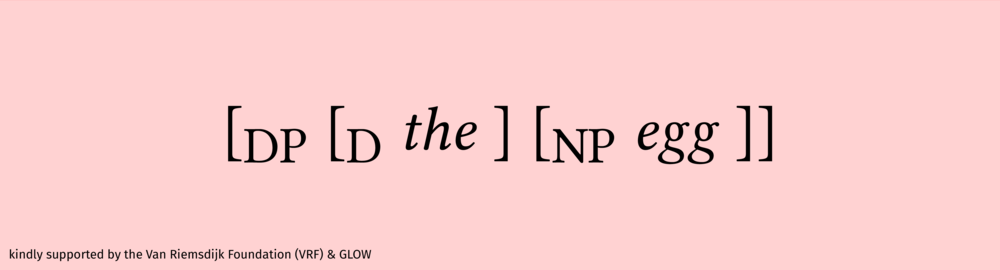

A foundational concern in this program is what phonological theory is about. As argued in Hale & Reiss (2008), it is about the phonological component of grammars, and not sound patterns, data, or the behavior of speakers, listeners or children. The classical claim of generative phonology, which maintains the competence-performance distinction, is that there is a specific computational device which allows speakers of a language to produce and interpret utterances. Correspondingly, the two main concerns in FP are “what is the nature of a representation?” and “what is the nature of the computation?”, which lead to the corollary question “how are theories evaluated?”. We select the simplest system of concepts which are justified by observable fact; thus Occam’s Razor is the fundamental logical principle of theory evaluation in FP. The second logical axiom of the theory is that any theoretical concept must be justified by proving that the concept explains facts; concepts are not stipulated ad libitum.

On the technical side, we investigate certain questions that exemplify the approach. First, what information about representations must be in UG? Does UG require a list of the features that are accessible to grammars? Do features have substantive (phonetic) properties? Can features be learned inductively, and if so, how? We will see how features can be learned inductively exclusively by reference to the primary linguistic data, as long as we also have a definite theory of the syntax of features and the syntax of computations. Second, what is the system of computations, and what is their form? Do computations modify existing objects, or do they evaluate possible objects? If they modify objects, do they change a single sub-object in a representation, or do they simultaneously change multiple objects? Can computations be reduced to a few general concepts that can be symbolically represented, that is, can computations be formalized?
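The contrast between computations that modify existing objects and computations that evaluate possible objects can be made concrete with a toy sketch. Everything in it is an illustrative assumption rather than part of the course materials: segments are plain characters instead of feature bundles, the process is a hypothetical word-final devoicing, and the names `apply_devoicing_rule`, `violations` and `select_candidate` are invented for this example.

```python
# Toy illustration only: segments are characters, not feature bundles.
# Hypothetical mapping from voiced stops to their voiceless counterparts.
VOICED_TO_VOICELESS = {"b": "p", "d": "t", "g": "k"}


def apply_devoicing_rule(form):
    """Modify an existing object: rewrite a word-final voiced stop."""
    if form and form[-1] in VOICED_TO_VOICELESS:
        return form[:-1] + VOICED_TO_VOICELESS[form[-1]]
    return form


def violations(candidate, underlying):
    """Evaluate a possible object.

    Returns a pair of counts: (final voiced stops, segments that differ
    from the underlying form). The first count is ranked above the second.
    """
    final_voiced = int(bool(candidate) and candidate[-1] in VOICED_TO_VOICELESS)
    changed = sum(a != b for a, b in zip(candidate, underlying))
    changed += abs(len(candidate) - len(underlying))
    return (final_voiced, changed)


def select_candidate(underlying, candidates):
    """Choose the candidate whose violation pair is smallest in ranked order."""
    return min(candidates, key=lambda c: violations(c, underlying))


if __name__ == "__main__":
    print(apply_devoicing_rule("bad"))
    print(select_candidate("bad", ["bad", "bat", "pat"]))
```

Both functions map the hypothetical input `bad` to `bat`, but they do so with different conceptual inventories: the first needs only an operation on one sub-object, while the second needs a candidate set, violation counts, and a ranking. Which inventory is simpler is exactly the kind of question the course raises.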

In proposing and evaluating a technical theory of phonological objects and computations, we must have an integrated view of the concepts of phonology and their interpretation, meaning that we need an ontology of phonology. For example, claims of logical superiority of certain theories of computation have been made on the grounds that they posit only the concept “constraint”, rather than the two concepts “rule” and “constraint”. But this argument is invalid, because “constraint1” is not the same thing as “constraint2”, and the entire set of concepts required for the constraint-only theory is much richer than the set required for the “rule and constraint” theory. With a fully specified ontology of phonological theories, it becomes meaningful to compute the overall simplicity of competing theories.