Slides: day 1, day 2, day 3, day 4

Phonology is made of melody and structure. The former lives below the skeleton (features, Elements), the latter at and above the skeleton (in a regular autosegmental representation). Substance refers to the phonetic properties of phonological items, such as labiality in the feature [labial]. In regular approaches, substance is a property of items below the skeleton, but not of those at and above it: there is no phonetics in an onset, an x-slot, a prosodic word, a grid mark, a foot etc. These items do not even have a phonetic correlate: there is no way to pronounce an onset, a prosodic word etc., and they do not contribute any identifiable phonetic property to what is pronounced.

Substance-free phonology (SFP) holds that any substance present in current approaches needs to be removed from phonology and relocated elsewhere, namely to phonetics. This is why work on SFP has so far concerned only melody: there is nothing to remove from items at and above the skeleton, because they have no phonetic properties.

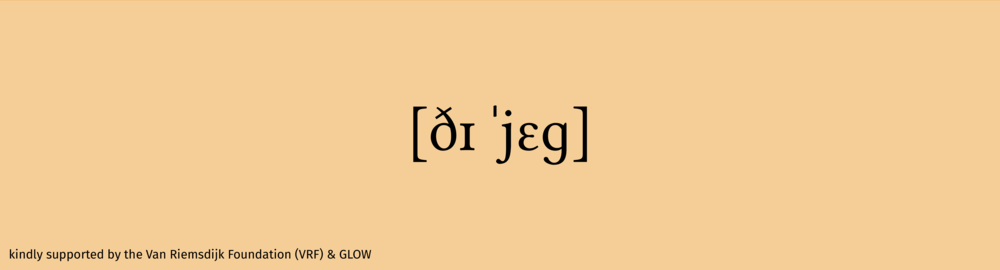

The class investigates the consequences of SFP for the part of phonology that is substance-free anyway, i.e. the items at and above the skeleton, which have no substance to begin with. SFP has a number of consequences, among them the necessary existence of a spell-out mechanism that relates substance-free phonological primes (α, β, γ) to phonetic correlates (labiality, voicing, etc.). Substance-free phonological primes are arbitrary by definition, in the sense that they are interchangeable: there is no reason why α, rather than β, O or “banana”, should be associated with, say, labiality.
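To make the notion of interchangeability concrete, here is a minimal sketch, assuming that spell-out can be modelled as a language-specific lookup table from prime labels to phonetic correlates. The table contents and the helper names `spell_out` and `relabel` are hypothetical and serve illustration only; they are not the spell-out mechanism proposed in the class. The point is that consistently swapping α and β, in the segment and in the table alike, leaves the phonetic output unchanged.

```python
from typing import Dict, FrozenSet

# Hypothetical spell-out table for one language: arbitrary prime labels
# paired with phonetic correlates (contents assumed for illustration).
SPELL_OUT: Dict[str, str] = {"α": "labial", "β": "voiced", "γ": "nasal"}


def spell_out(primes: FrozenSet[str], table: Dict[str, str]) -> FrozenSet[str]:
    """Map a set of phonological primes onto their phonetic correlates."""
    return frozenset(table[p] for p in primes)


def relabel(primes: FrozenSet[str], renaming: Dict[str, str]) -> FrozenSet[str]:
    """Rename primes consistently; labels absent from `renaming` are kept."""
    return frozenset(renaming.get(p, p) for p in primes)


segment = frozenset({"α", "β"})   # a segment as a set of primes
swap = {"α": "β", "β": "α"}       # exchange the two labels everywhere
swapped_table = {swap.get(k, k): v for k, v in SPELL_OUT.items()}
swapped_segment = relabel(segment, swap)

# Same phonetic output: the labels themselves carry no substance.
assert spell_out(segment, SPELL_OUT) == spell_out(swapped_segment, swapped_table)
print(sorted(spell_out(segment, SPELL_OUT)))  # ['labial', 'voiced']
```

Any other renaming, including α → “banana”, behaves the same way, as long as it is applied consistently to the segment and to the table.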

Items at and above the skeleton are not interchangeable, though: there is no way to replace an onset with a nucleus. This is because they have stable cross-linguistic properties: nuclei host vowels, not consonants (except for syllabic consonants on a certain analysis), rhymes form a unit with the preceding onset, not with the following one, etc. By contrast, substance-free melodic primes do not have any such cross-linguistically stable properties: α has no intrinsic property that β lacks, or vice versa. The whole purpose of substance-free melodic primes is precisely to be colourless.

How, then, does the arbitrary world below the skeleton produce a non-arbitrary world at and above it? This looks like a contradiction in terms, an equation with no possible solution.

The class breaks down this SFP-induced hiatus between the two worlds into a number of more local questions, with modularity as a guiding light. The key insight (so trivial that it is hardly ever mentioned) is that all items at and above the skeleton are emanations of sonority, i.e. the result of a modular computation based on sonority primes (the syllabification algorithm). Place and Laryngeal properties do not contribute anything to the construction of upper items. Therefore the SFP-induced hiatus boils down to one concerning only sonority primes: since the structure they produce through computation is not arbitrary, they are themselves not arbitrary. That is, sonority primes are innate (children are born with primes associated with phonetic correlates), while Place and Laryngeal primes are not: they are emergent, i.e. children build them on the basis of environmental information only.
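The claim that structure is computed from sonority alone can be illustrated with a toy syllabifier. This is a minimal sketch under loudly stated assumptions: the numeric sonority scale, the hypothetical `Segment` record that splits a segment into Son, Place and Lar fields, and the rules (nuclei at strict local sonority peaks, onsets as maximal runs of rising sonority, word-initial consonants in the first onset) are simplifications chosen for illustration, not the syllabification algorithm discussed in the class.

```python
from dataclasses import dataclass, replace
from typing import List

# Hypothetical sonority scale (values assumed for illustration).
STOP, FRICATIVE, NASAL, LIQUID, GLIDE, VOWEL = 1, 2, 3, 4, 5, 6


@dataclass(frozen=True)
class Segment:
    symbol: str
    son: int    # sonority prime: the only field the syllabifier reads
    place: str  # Place prime: ignored by the syllabifier
    lar: str    # Laryngeal prime: ignored by the syllabifier


def nucleus_positions(sons: List[int]) -> List[int]:
    """Indices of strict local sonority peaks (toy nucleus detection)."""
    peaks = []
    for i, s in enumerate(sons):
        left = sons[i - 1] if i > 0 else -1
        right = sons[i + 1] if i < len(sons) - 1 else -1
        if s > left and s > right:
            peaks.append(i)
    return peaks


def syllabify(word: List[Segment]) -> List[str]:
    """Label each segment O(nset), N(ucleus) or C(oda) from sonority only."""
    sons = [seg.son for seg in word]
    labels = ["C"] * len(word)  # default: coda of the preceding nucleus
    prev_peak = -1
    for n in nucleus_positions(sons):
        labels[n] = "N"
        i = n
        # Onset: extend leftwards while sonority rises towards the nucleus.
        while i - 1 > prev_peak and sons[i - 1] < sons[i]:
            i -= 1
            labels[i] = "O"
        if prev_peak == -1:
            # Toy assumption: all word-initial consonants join the first onset.
            for j in range(n):
                labels[j] = "O"
        prev_peak = n
    return labels


planta = [
    Segment("p", STOP, "labial", "voiceless"),
    Segment("l", LIQUID, "coronal", "voiced"),
    Segment("a", VOWEL, "dorsal", "voiced"),
    Segment("n", NASAL, "coronal", "voiced"),
    Segment("t", STOP, "coronal", "voiceless"),
    Segment("a", VOWEL, "dorsal", "voiced"),
]

# Change only Place and Laryngeal values: /planta/ becomes /blanka/.
blanka = list(planta)
blanka[0] = replace(planta[0], symbol="b", lar="voiced")
blanka[4] = replace(planta[4], symbol="k", place="dorsal")

print(syllabify(planta))
print(syllabify(blanka))
```

Because `syllabify` only ever reads the `son` field, changing Place or Laryngeal values cannot alter the constituent structure it returns: both words come out as pla.n.ta-style structures with identical O/N/C labels.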

Based on this fundamental split, where phonology is not a single computational system but rather a cover term for three distinct modules (Son, Place, Lar), non-trivial questions arise. How do multiple-module spell-out and segment integrity work, i.e. how is the information of three distinct modules mapped onto a single phonetic item that we call a segment? What exactly is spelt out (primes, skeletal slots, constituents, …)? Given a surface alternation, how can the analyst distinguish effects of phonological computation from effects of spell-out?

The class closely follows my freshly published article 3xPhonology (Scheer 2022), which is available in the reading list below. Most of the other items relate to sonority: what it is, what its phonetic correlate is, and whether it is innate. One item, Scheer (2016), is about the massive empirical evidence for the segregation of items below vs. at and above the skeleton.

Berent, Iris 2013. The Phonological Mind. Cambridge: CUP.

Berent, Iris 2013. The Phonological Mind. Trends in Cognitive Sciences 17: 319-327.

Clements, George 2009. Does sonority have a phonetic basis? Contemporary views on architecture and representations in phonological theory, edited by Eric Raimy & Charles Cairns, 165-175. Cambridge, Mass.: MIT Press.

Harris, John 2006. The phonology of being understood: further arguments against sonority. Lingua 116: 1483-1494.

Ohala, John 1992. Alternatives to the sonority hierarchy for explaining segmental sequential constraints. Papers from the Parasession on the Syllable, Chicago Linguistic Society, 319-338. Chicago: Chicago Linguistic Society.

Parker, Steve 2017. Sounding out sonority. Language and Linguistics Compass 11: e12248.

Scheer, Tobias 2016. Melody-free syntax and phonologically conditioned allomorphy. Morphology 26: 341-378.

Scheer, Tobias 2019. Sonority is different. Studies in Polish Linguistics 14 (special volume 1): 127-151.

Scheer, Tobias 2019. Phonetic arbitrariness: a cartography. Phonological Studies 22: 105-118.

Scheer, Tobias 2022. 3xPhonology. Canadian Journal of Linguistics.

Stilp, Christian E. & Keith R. Kluender 2010. Cochlea-scaled entropy, not consonants, vowels, or time, best predicts speech intelligibility. PNAS 107: 12387-12392.